Now that we know how to edit a Ceph CRUSH map, let's look at what is inside it. A CRUSH map file contains four main sections, as follows:

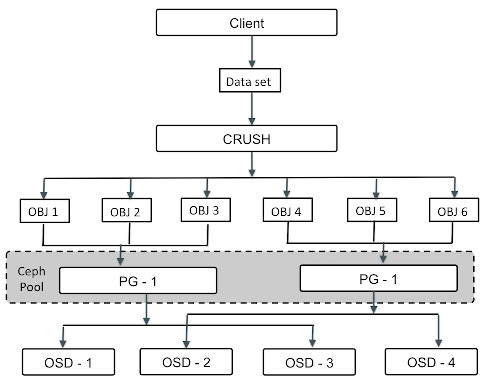

- Devices: This section of the CRUSH map keeps a list of all the OSD devices in your cluster. The OSD is a physical disk corresponding to the ceph-osd daemon. To map the PG to the OSD device, CRUSH requires a list of OSD devices. This list of devices appears in the beginning of the CRUSH map to declare the device in the CRUSH map. The following is the sample device list:
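As a representative illustration, the Python sketch below embeds a small device list in decompiled CRUSH map syntax and parses it. The device IDs and OSD names are assumptions chosen for the example, not output from a real cluster, and `parse_devices` is a hypothetical helper rather than part of any Ceph tooling.

```python
# Hypothetical excerpt of a decompiled CRUSH map; IDs and names are illustrative.
SAMPLE_DEVICES = """\
# devices
device 0 osd.0
device 1 osd.1
device 2 osd.2
"""


def parse_devices(crush_text: str) -> dict[int, str]:
    """Return {device_id: osd_name} for every 'device <id> <name>' line."""
    devices = {}
    for line in crush_text.splitlines():
        fields = line.split()
        # Skip comments, blank lines, and anything that is not a device entry.
        if len(fields) >= 3 and fields[0] == "device":
            devices[int(fields[1])] = fields[2]
    return devices


devices = parse_devices(SAMPLE_DEVICES)
print(devices)
```

Each `device` line pairs a non-negative integer ID with the OSD name that CRUSH can later reference as an item inside a bucket.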

- Bucket types: This defines the types of buckets used in your CRUSH hierarchy. Buckets consist of a hierarchical aggregation of physical locations (for example, rows, racks, chassis, hosts, and so on) and their assigned weights. They facilitate a hierarchy of nodes and leaves, where the node bucket represents a physical location and can aggregate other nodes and leaves buckets under the hierarchy. The leaf bucket represents the ceph-osd daemon and its underlying physical device. The following table lists the default bucket types:

CRUSH also supports the creation of custom bucket types. The default bucket types can be deleted, and new types can be introduced as needed.
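A minimal sketch of how the types section might be generated is shown below. The list of default type names is an assumption based on older Ceph releases (newer releases may name or number the types differently), and `render_types` is a hypothetical helper that only formats text in the `type <id> <name>` style of a decompiled CRUSH map.

```python
# Default bucket types as found in older Ceph releases (an assumption here;
# check the decompiled map of your own cluster for the authoritative list).
DEFAULT_TYPES = ["osd", "host", "chassis", "rack", "row", "pdu",
                 "pod", "room", "datacenter", "region", "root"]


def render_types(type_names: list[str]) -> str:
    """Format bucket types as 'type <id> <name>' lines, numbered from 0."""
    lines = ["# types"]
    lines += [f"type {type_id} {name}" for type_id, name in enumerate(type_names)]
    return "\n".join(lines)


# Hypothetical customization: drop two unused levels from the hierarchy.
custom_types = [t for t in DEFAULT_TYPES if t not in ("pdu", "pod")]
print(render_types(custom_types))
```

The leaf type (`osd`) gets ID 0 and the remaining types are numbered upward toward the root of the hierarchy.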

- Bucket instances: Once you define bucket types, you must declare bucket instances for your hosts. A bucket instance requires the bucket type, a unique name (string), a unique ID expressed as a negative integer, a weight relative to the total capacity of its items, a bucket algorithm (straw, by default), and the hash (0, by default, reflecting the CRUSH hash rjenkins1). A bucket may have one or more items, and these items may consist of other buckets or OSDs. Each item should have a weight that reflects its relative weight. The general syntax of a bucket instance looks as follows:

We will now briefly cover the parameters used by a CRUSH bucket instance:

- bucket-type: The type of the bucket, which specifies the level of the CRUSH hierarchy at which the bucket (and the OSDs beneath it) is located.

- bucket-name: A unique bucket name.

- id: The unique ID, expressed as a negative integer.

- weight: Ceph writes data evenly across the cluster disks, which helps performance and data distribution. This makes all the disks participate in the cluster and ensures that they are utilized to a similar degree relative to their capacity. To do so, Ceph uses a weighting mechanism: CRUSH assigns a weight to each OSD, and a higher weight indicates more physical storage capacity. A weight expresses the relative difference between device capacities. We recommend using 1.00 as the relative weight for a 1 TB storage device. Similarly, a weight of 0.5 would represent approximately 500 GB, and a weight of 3.00 would represent approximately 3 TB.

- alg: Ceph supports multiple bucket algorithms to choose from. They differ from each other in performance and reorganization efficiency. Let's briefly cover these bucket algorithms:

- uniform: The uniform bucket can be used if the storage devices have exactly the same weight. For non-uniform weights, this bucket type should not be used. The addition or removal of devices in this bucket type requires the complete reshuffling of data, which makes this bucket type less efficient.

- list: The list buckets aggregate their contents as linked lists and can contain storage devices with arbitrary weights. In the case of cluster expansion, new storage devices can be added to the head of a linked list with minimum data migration. However, storage device removal requires a significant amount of data movement. So, this bucket type is suitable for scenarios in which the removal of devices from the cluster is extremely rare or non-existent. In addition, list buckets are efficient for small sets of items, but they may not be appropriate for large sets.

- tree: The tree buckets store their items in a binary tree. They are more efficient than list buckets when a bucket contains a larger set of items. Tree buckets are structured as a weighted binary search tree with items at the leaves. Each interior node knows the total weight of its left and right subtrees and is labeled according to a fixed strategy. The tree buckets are a good all-round choice, providing excellent performance and decent reorganization efficiency.

- straw: To select an item using list and tree buckets, a limited number of hash values need to be calculated and compared by weight. They use a divide and conquer strategy, which gives precedence to certain items (for example, those at the beginning of a list). This improves the performance of the replica placement process, but it introduces moderate reorganization when bucket contents change due to addition, removal, or re-weighting.

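The straw bucket type allows all items to compete fairly against each other for replica placement through a process analogous to drawing straws. In scenarios where removal is expected and reorganization efficiency is critical, straw buckets provide optimal migration behavior between subtrees.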


- straw2: This is an improved straw bucket that correctly avoids any data movement between items A and B, when neither A's nor B's weights are changed. In other words, if we adjust the weight of item C by adding a new device to it, or by removing it completely, the data movement will take place to or from C, never between other items in the bucket. Thus, the straw2 bucket algorithm reduces the amount of data migration required when changes are made to the cluster.

- hash: Each bucket uses a hash algorithm. Currently, Ceph supports rjenkins1. Enter 0 as your hash setting to select rjenkins1.

- item: A bucket may have one or more items. These items may consist of node buckets or leaves. Items may have a weight that reflects the relative weight of the item.
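The straw2 property described above (data moves only to or from the item whose weight changed) can be illustrated with a small simulation. This is a simplified sketch, not Ceph's implementation: it assumes SHA-256 in place of the rjenkins1 hash and floating-point logarithms in place of Ceph's fixed-point arithmetic, and the item names and weights are hypothetical.

```python
import hashlib
import math


def straw2_choose(pg_id: int, items: dict[str, float]) -> str:
    """Pick one item: each item draws ln(u) / weight and the largest draw wins."""
    best_name, best_draw = None, -math.inf
    for name, weight in items.items():
        if weight <= 0:
            continue  # zero-weight items never win
        digest = hashlib.sha256(f"{pg_id}:{name}".encode()).digest()
        # Map the hash to a pseudo-random value u in (0, 1].
        u = (int.from_bytes(digest[:8], "big") + 1) / 2**64
        draw = math.log(u) / weight  # depends only on this item's own weight
        if draw > best_draw:
            best_name, best_draw = name, draw
    return best_name


before = {"A": 1.0, "B": 1.0}
after = {**before, "C": 2.0}  # add a new item C to the bucket

moved = {}
for pg in range(1000):
    old, new = straw2_choose(pg, before), straw2_choose(pg, after)
    if old != new:
        moved[(old, new)] = moved.get((old, new), 0) + 1

print(moved)  # every recorded move has C as its destination
```

Because each item's draw depends only on its own weight, adding C leaves the draws of A and B untouched, so a placement either stays where it was or moves to C.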

The following screenshot illustrates CRUSH bucket instances. Here, we have three host bucket instances, each of which consists of OSDs:
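As a textual illustration of such instances, the Python sketch below renders three host buckets in the style of a decompiled CRUSH map. The host names, bucket IDs, OSD names, and the 1 TB-per-OSD weights are all assumptions made for the example, and the `Bucket` class is a hypothetical helper, not a Ceph API.

```python
from dataclasses import dataclass, field


@dataclass
class Bucket:
    bucket_type: str
    name: str
    bucket_id: int                      # buckets use negative IDs
    alg: str = "straw"
    hash_id: int = 0                    # 0 selects rjenkins1
    items: dict[str, float] = field(default_factory=dict)

    def weight(self) -> float:
        """A bucket's weight is the sum of its items' weights."""
        return sum(self.items.values())

    def render(self) -> str:
        lines = [
            f"{self.bucket_type} {self.name} {{",
            f"\tid {self.bucket_id}",
            f"\t# weight {self.weight():.3f}",
            f"\talg {self.alg}",
            f"\thash {self.hash_id}\t# rjenkins1",
        ]
        lines += [f"\titem {item} weight {w:.3f}" for item, w in self.items.items()]
        lines.append("}")
        return "\n".join(lines)


# Three hypothetical hosts with three 1 TB OSDs each (weight 1.00 per TB).
hosts = [
    Bucket("host", f"ceph-node{n}", -(n + 1),
           items={f"osd.{3 * (n - 1) + i}": 1.0 for i in range(3)})
    for n in (1, 2, 3)
]

for host in hosts:
    print(host.render())
```

Each rendered block lists the bucket type and name, its negative ID, the algorithm and hash, and one `item` line per OSD with its relative weight.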

- Rules: The CRUSH maps contain CRUSH rules that determine the data placement for pools. As the name suggests, these are the rules that define the pool properties and the way data gets stored in the pools. They define the replication and placement policy that allows CRUSH to store objects in a Ceph cluster. The default CRUSH map contains a rule for default pools, that is, rbd. The general syntax of a CRUSH rule looks as follows:
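To make the rule layout concrete, the sketch below embeds a representative replicated rule in decompiled CRUSH map syntax and splits it into its parameters and steps. The rule name and values are assumptions modeled on the defaults of older Ceph releases, and `parse_rule` is a hypothetical helper written for this example.

```python
# Representative rule text; the name and values are illustrative assumptions.
SAMPLE_RULE = """\
rule replicated_ruleset {
    ruleset 0
    type replicated
    min_size 1
    max_size 10
    step take default
    step chooseleaf firstn 0 type host
    step emit
}
"""


def parse_rule(text: str) -> dict:
    """Split a single rule block into its name, scalar parameters, and steps."""
    rule = {"steps": []}
    for line in text.splitlines():
        fields = line.split()
        if not fields or fields[0] == "}":
            continue
        if fields[0] == "rule":
            rule["name"] = fields[1]
        elif fields[0] == "step":
            rule["steps"].append(fields[1:])
        else:
            key, value = fields[0], fields[1]
            rule[key] = int(value) if value.isdigit() else value
    return rule


rule = parse_rule(SAMPLE_RULE)
print(rule)
```

The parsed result separates the scalar settings (`ruleset`, `type`, `min_size`, `max_size`) from the ordered list of `step` operations that CRUSH executes.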

We will now briefly cover the parameters used by a CRUSH rule:

- ruleset: An integer value; it classifies a rule as belonging to a set of rules.

- type: A string value; it's the type of pool that is either replicated or erasure coded.

- min_size: An integer value; if a pool makes fewer replicas than this number, CRUSH will not select this rule.

- max_size: An integer value; if a pool makes more replicas than this number, CRUSH will not select this rule.

- step take: This takes a bucket name and begins iterating down the tree.

- step choose firstn <num> type <bucket-type>: This selects the number (N) of buckets of a given type, where the number (N) is usually the number of replicas in the pool (that is, pool size):

- If num == 0, select N buckets

- If 0 < num < N, select num buckets

- If num < 0, select N - |num| buckets

For example: step choose firstn 1 type row

In this example, num = 1. Assuming the pool size is 3, CRUSH evaluates the condition as 0 < 1 < 3 and therefore selects one bucket of type row.

- step chooseleaf firstn <num> type <bucket-type>: This first selects a set of buckets of a bucket type, and then chooses the leaf node from the subtree of each bucket in the set of buckets. The number of buckets in the set (N) is usually the number of replicas in the pool:

- If num == 0, select N buckets

- If 0 < num < N, select num buckets

- If num < 0, select N - |num| buckets

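For example: step chooseleaf firstn 0 type row

In this example, num = 0. Assuming the pool size is 3, CRUSH evaluates the condition as 0 == 0 and selects a set of three buckets of type row. It then chooses a leaf node from the subtree of each bucket in the set. In this way, CRUSH selects three leaf nodes.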

- step emit: This outputs the current value and empties the stack. It is typically used at the end of a rule, but it may also be used to form different trees in the same rule.
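The way the num argument of a firstn step resolves to a bucket count can be sketched as a small function. This is a simplified reading of the three cases listed above, not Ceph's mapper code: `resolve_count` is a hypothetical name, and capping the result at the pool size and at zero is an assumption made so the function always returns a usable count.

```python
def resolve_count(num: int, pool_size: int) -> int:
    """Return how many buckets a 'firstn <num>' step selects for a pool of size N."""
    if num == 0:
        return pool_size                  # num == 0: select N buckets
    if num > 0:
        return min(num, pool_size)        # 0 < num < N: select num buckets
    return max(pool_size + num, 0)        # num < 0: select N - |num| buckets


# The two examples from the text, plus a negative case, for a pool of size 3.
print(resolve_count(1, 3))   # step choose firstn 1 type row
print(resolve_count(0, 3))   # step chooseleaf firstn 0 type row
print(resolve_count(-1, 3))  # one fewer than the pool size
```

With a pool size of 3, the calls correspond to selecting one row, three rows, and two buckets respectively.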