This recipe covers configuring Nova to store entire virtual machines on Ceph RBD:

- Navigate to the [libvirt] section of /etc/nova/nova.conf and add the following:
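
As a rough sketch of what these settings can look like, the helper below prints a `[libvirt]` fragment for review before it is merged into the configuration file. The function name is hypothetical, and the pool name `vms`, the user `cinder`, and the `ceph.conf` path are assumptions rather than values confirmed by this recipe. The secret UUID must be the libvirt secret that holds your Ceph key.

```shell
# Print a [libvirt] fragment to review and merge into nova.conf.
# Arguments: <secret-uuid> [pool] [ceph-user]
# Pool "vms" and user "cinder" are assumed defaults, not values from this recipe.
libvirt_rbd_fragment() {
  secret_uuid="${1:?usage: libvirt_rbd_fragment <secret-uuid> [pool] [user]}"
  pool="${2:-vms}"
  user="${3:-cinder}"
  cat <<EOF
[libvirt]
inject_partition = -2
images_type = rbd
images_rbd_pool = ${pool}
images_rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_user = ${user}
rbd_secret_uuid = ${secret_uuid}
EOF
}
```

Calling `libvirt_rbd_fragment <secret-uuid>` writes the fragment to standard output, so nothing is changed on disk until you paste it in yourself.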

- Verify your changes:
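
One way to verify is to print only the active RBD-related lines of the file. This is a minimal sketch: the function name is hypothetical and the default path assumes the standard Nova configuration location.

```shell
# Show uncommented lines that mention rbd or inject_partition.
# Argument: [path to nova.conf], defaulting to /etc/nova/nova.conf.
show_rbd_settings() {
  conf="${1:-/etc/nova/nova.conf}"
  grep -E 'rbd|inject_partition' "$conf" | grep -v '^[[:space:]]*#'
}
```

Run `show_rbd_settings` with no arguments to check the default file, or pass another path to check a copy.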

- Restart the OpenStack Nova services:
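
The restart might look like the sketch below. The unit name `openstack-nova-compute` is an assumption based on RHEL/CentOS packaging, and the function name is hypothetical. Pass a different unit name if your distribution uses one.

```shell
# Restart the compute service so the nova.conf changes take effect.
# Argument: [unit name]; the default is an assumption (RHEL/CentOS packaging).
restart_nova_compute() {
  systemctl restart "${1:-openstack-nova-compute}"
}
```

The `[libvirt]` section is read by the compute service, so that is the service restarted here.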

- To boot a virtual machine from Ceph, the Glance image must be in the RAW format. We will take the cirros image that we downloaded earlier in this chapter and convert it from QCOW2 to RAW (this is important). You can use any other image, as long as it is in the RAW format:
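
The conversion can be sketched with `qemu-img convert`. The function name is hypothetical, and the source and destination file names are left as arguments because the exact cirros file name depends on what you downloaded.

```shell
# Convert a QCOW2 image to RAW.
# Arguments: <source.img> <destination.raw>
convert_qcow2_to_raw() {
  src="${1:?usage: convert_qcow2_to_raw <source.img> <destination.raw>}"
  dst="${2:?usage: convert_qcow2_to_raw <source.img> <destination.raw>}"
  qemu-img convert -f qcow2 -O raw "$src" "$dst"
}
```

Afterwards, `qemu-img info <destination.raw>` should report the file format as raw.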

- Create a Glance image using a RAW image:
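
A minimal sketch of the upload, assuming the legacy `glance` command-line client is installed and OpenStack credentials are already loaded in the environment. The function name and any image name you pass are hypothetical.

```shell
# Upload a RAW file as a Glance image.
# Arguments: <image-name> <file.raw>
upload_raw_image() {
  name="${1:?usage: upload_raw_image <image-name> <file.raw>}"
  file="${2:?usage: upload_raw_image <image-name> <file.raw>}"
  glance image-create --name "$name" \
    --disk-format raw --container-format bare \
    --file "$file"
}
```

The command prints the new image's properties, including the ID needed in the following steps.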

- To test the boot from the Ceph volume feature, create a bootable volume:
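
Creating the volume from the RAW image could look like this, assuming the legacy `cinder` client. The function name is hypothetical, and the 1 GB default size is an assumption that suits a small image such as cirros. Newer client versions prefer `--name` over `--display-name`.

```shell
# Create a bootable volume from a Glance image.
# Arguments: <image-id> <volume-name> [size in GB, default 1]
create_boot_volume() {
  image_id="${1:?usage: create_boot_volume <image-id> <volume-name> [size-gb]}"
  name="${2:?usage: create_boot_volume <image-id> <volume-name> [size-gb]}"
  size="${3:-1}"
  cinder create --image-id "$image_id" --display-name "$name" "$size"
}
```

The image ID is the one reported when the Glance image was created.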

- List Cinder volumes to check if the bootable field is true:
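
Besides `cinder list`, a single volume can be inspected directly. The sketch below, with a hypothetical function name, filters the `cinder show` output down to the bootable row.

```shell
# Print the "bootable" row for one volume.
# Argument: <volume-id-or-name>
volume_bootable_flag() {
  cinder show "${1:?usage: volume_bootable_flag <volume>}" | grep -w bootable
}
```

The value should read true once the image has been copied into the volume.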

- Now, we have a bootable volume, which is stored on Ceph, so let's launch an instance with this volume:
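
A sketch of the launch using the legacy `nova` client follows. The function name is hypothetical, and flavor `1` is an assumed default (the smallest flavor on a stock installation). The mapping string attaches the volume as `vda` and keeps it when the instance is deleted.

```shell
# Boot an instance from an existing bootable volume.
# Arguments: <volume-id> <instance-name> [flavor, default 1]
boot_from_volume() {
  volume_id="${1:?usage: boot_from_volume <volume-id> <instance-name> [flavor]}"
  name="${2:?usage: boot_from_volume <volume-id> <instance-name> [flavor]}"
  flavor="${3:-1}"
  # Mapping format: <device>=<id>:<type>:<size>:<delete-on-terminate>
  nova boot --flavor "$flavor" \
    --block-device-mapping "vda=${volume_id}:::0" \
    "$name"
}
```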

- A known issue with the qemu-kvm package causes nova boot to fail:

- The os-node1 VM has the following affected packages installed:

- Upgrade the qemu-kvm, qemu-kvm-common, and qemu-img packages:
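
On an RPM-based node the upgrade might be sketched as follows. This assumes `yum` is the package manager and that the configured repositories carry a build with the fix. The function name is hypothetical.

```shell
# Show the installed versions, then upgrade the three packages.
upgrade_qemu_packages() {
  rpm -q qemu-kvm qemu-kvm-common qemu-img
  yum -y update qemu-kvm qemu-kvm-common qemu-img
}
```

Run it as root on os-node1, then retry the `nova boot` command.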

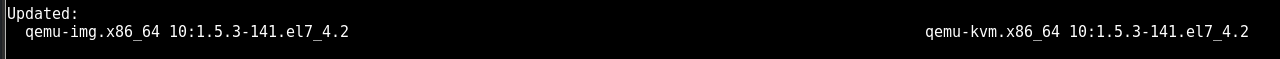

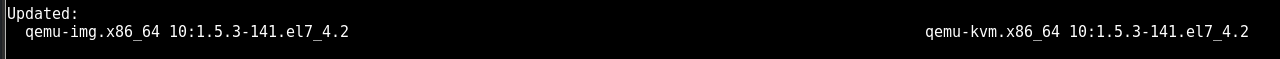

- It will install the following packages:

- Check the instance status:
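
The status can be read from `nova list`, or for a single instance with a small filter like the one below. The function name is hypothetical.

```shell
# Print the status row for one instance.
# Argument: <instance-name-or-id>
instance_status() {
  nova show "${1:?usage: instance_status <instance>}" | grep -w status
}
```

An instance that booted successfully reports ACTIVE.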

- At this point, we have an instance running from a Ceph volume. Next, boot an instance directly from an image:
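
Booting from the image could be sketched like this, again assuming the legacy `nova` client and flavor `1` as a default. The function name is hypothetical. With `images_type = rbd` set, Nova creates the instance disk in the configured Ceph pool.

```shell
# Boot an instance directly from a Glance image.
# Arguments: <image-id-or-name> <instance-name> [flavor, default 1]
boot_from_image() {
  image="${1:?usage: boot_from_image <image> <instance-name> [flavor]}"
  name="${2:?usage: boot_from_image <image> <instance-name> [flavor]}"
  flavor="${3:-1}"
  nova boot --flavor "$flavor" --image "$image" "$name"
}
```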

- Finally, check the instance status:

- Check if the instance is stored in Ceph:
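
Listing the pool that Nova writes to is one way to confirm this. In the sketch below the function name is hypothetical and the pool name `vms` is an assumed default, so pass whatever `images_rbd_pool` is set to.

```shell
# List RBD images in the pool used for instance disks.
# Argument: [pool name], defaulting to the assumed pool "vms".
list_instance_disks() {
  rbd -p "${1:-vms}" ls
}
```

Nova names each disk after the instance UUID with a `_disk` suffix, so the instance's ID from `nova list` should appear in the output.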